Reflecting on 2025 and Looking Ahead: LLM-Centric Software Development

Author: Ajeet Kumar Singh

2025 was a turning point in my journey with software development. I felt its impact on two levels—first, in how I personally approached building software, and then in how I guided my team through meaningful change.

On a personal level, AI reshaped how I approached development. It challenged the long-established practice of writing code in isolation and pushed me to explore smarter, more collaborative ways to design, build, and deliver systems. All of this unfolded under the familiar "do more with less" paradox.

As I adapted, my focus naturally shifted to the team. The goal was clear: help everyone adopt AI effectively and sustainably. We avoided chasing every flashy new LLM or tool. Instead, we embraced a principle that quickly became our guiding slogan: "Use existing tools as they fit you." Rather than waiting for the "next best" model to magically fix our code, we encouraged developers to master what they already had, like GitHub Copilot offerings.

To make this actionable, we followed a set of practices:

- Conduct targeted training sessions to help developers learn how to collaborate effectively with AI

- Provide clear context and specifications to AI, using documentation, tickets, and relevant code snippets

- Break work into small, well-defined tasks and monitor AI output closely

- Generate tests alongside code and prune or simplify before committing

- Identify areas of code best suited for AI assistance

- Establish a standard context/spec format and review workflow

- Pilot one feature at a time, track results, and refine processes iteratively
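To make the "standard context/spec format" practice concrete, here is a minimal sketch of what such a template might look like. The `TaskSpec` class and its field names are hypothetical, not the exact format the team used. The idea is that every AI request carries the same sections (objective, relevant context, constraints, acceptance criteria), and that a spec without checkable acceptance criteria is rejected before it reaches the model.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical spec format for one small, well-defined AI-assisted task."""

    objective: str
    ticket: str = ""
    context_files: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    acceptance_criteria: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the spec is usable."""
        problems = []
        if not self.objective.strip():
            problems.append("objective is empty")
        if not self.acceptance_criteria:
            problems.append("no acceptance criteria: output cannot be checked")
        return problems

    def to_prompt(self) -> str:
        """Render the spec as a plain-text prompt with a fixed section order."""

        def section(title: str, items: list[str]) -> str:
            body = "\n".join(f"- {item}" for item in items) if items else "- (none)"
            return f"## {title}\n{body}"

        parts = [f"## Objective\n{self.objective}"]
        if self.ticket:
            parts.append(f"## Ticket\n{self.ticket}")
        parts.append(section("Relevant files", self.context_files))
        parts.append(section("Constraints", self.constraints))
        parts.append(section("Acceptance criteria", self.acceptance_criteria))
        return "\n\n".join(parts)


if __name__ == "__main__":
    spec = TaskSpec(
        objective="Add retry with exponential backoff to the payment client",
        ticket="PAY-123",  # hypothetical ticket id
        context_files=["payments/client.py"],  # hypothetical path
        constraints=["No new third-party dependencies"],
        acceptance_criteria=["Unit tests cover three retries followed by failure"],
    )
    assert spec.validate() == []
    print(spec.to_prompt())
```

A fixed section order also makes specs easy to diff and review, which supports the "review workflow" half of the same practice.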

One moment that stands out involved a massive dataset spanning several months and multiple sources. Historically, analyzing it would have taken days of coding, debugging, and iteration. In 2025, I approached it differently. I guided AI to handle the heavy lifting—defining objectives, validating outputs, and iterating methodically. In under an hour, insights emerged that would have otherwise taken days.

This experience shifted my mental model: I now approach complex problems by first asking, "How would I explain this to an AI?" Paradoxically, answering that question makes my own objectives clearer.

This wasn't an isolated moment. It was the culmination of several years of rapid, compounding progress in AI tooling—progress that's easy to underestimate when you're living through it.

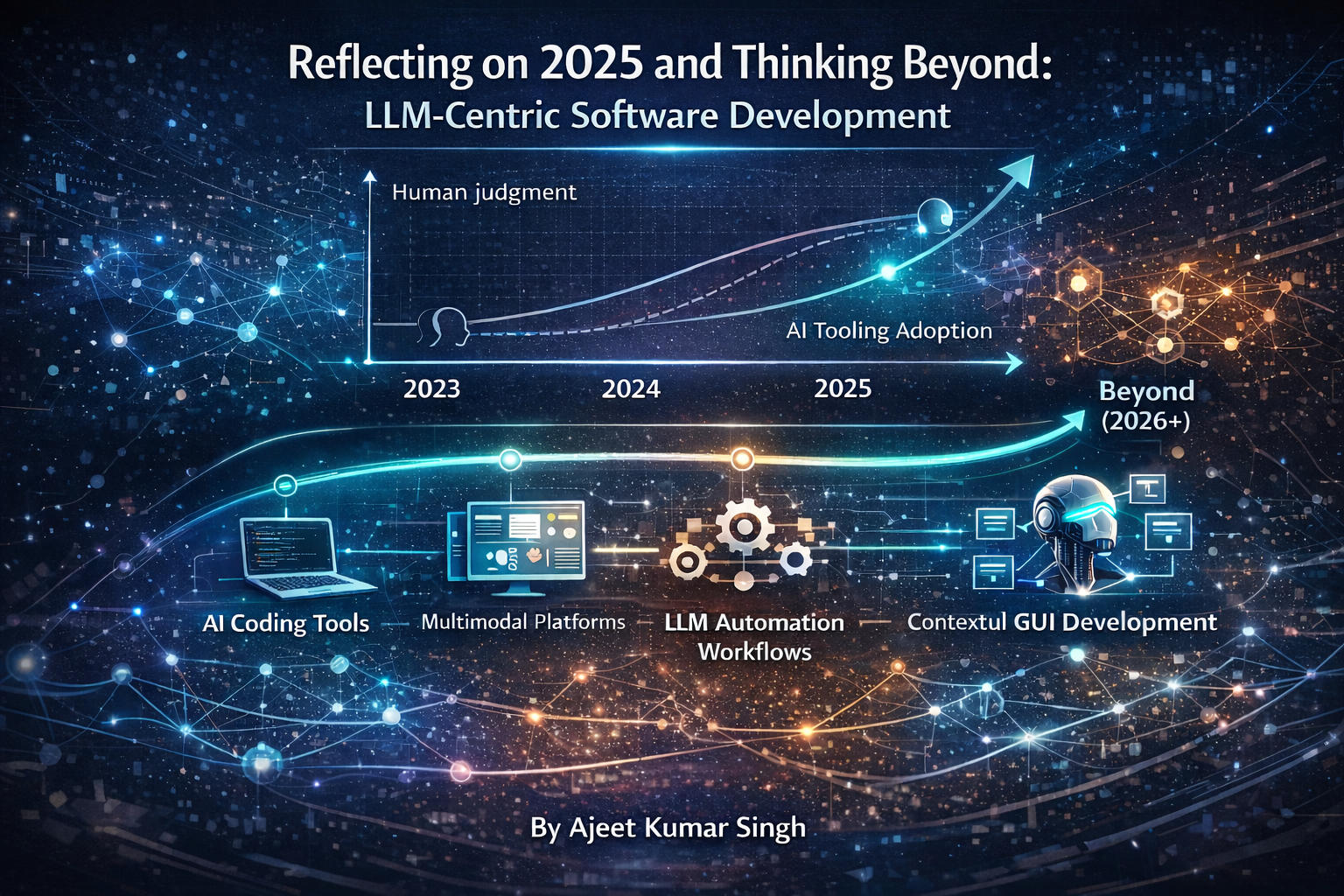

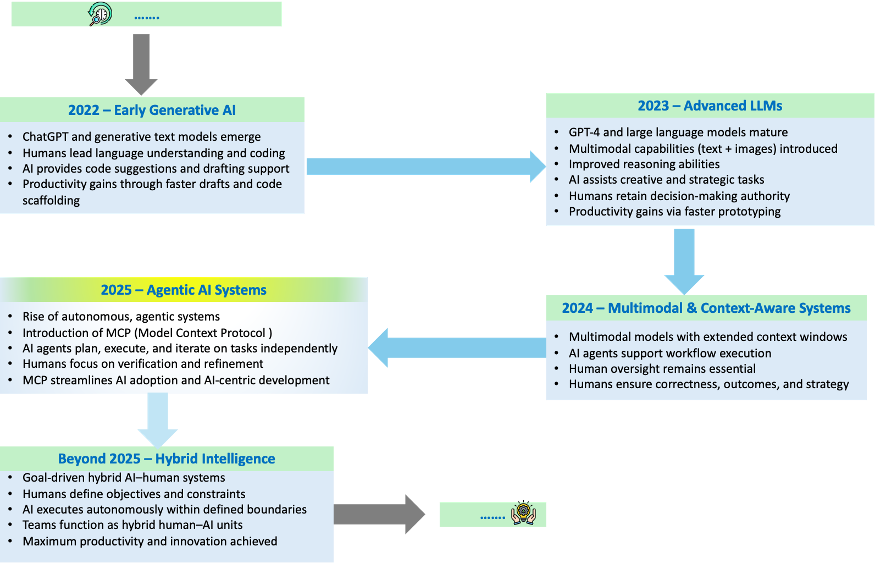

To put this evolution into perspective, the following timeline highlights key advancements in AI-assisted software development that I've been exploring.

2025 Showed a Significant Increase in Developer AI Adoption

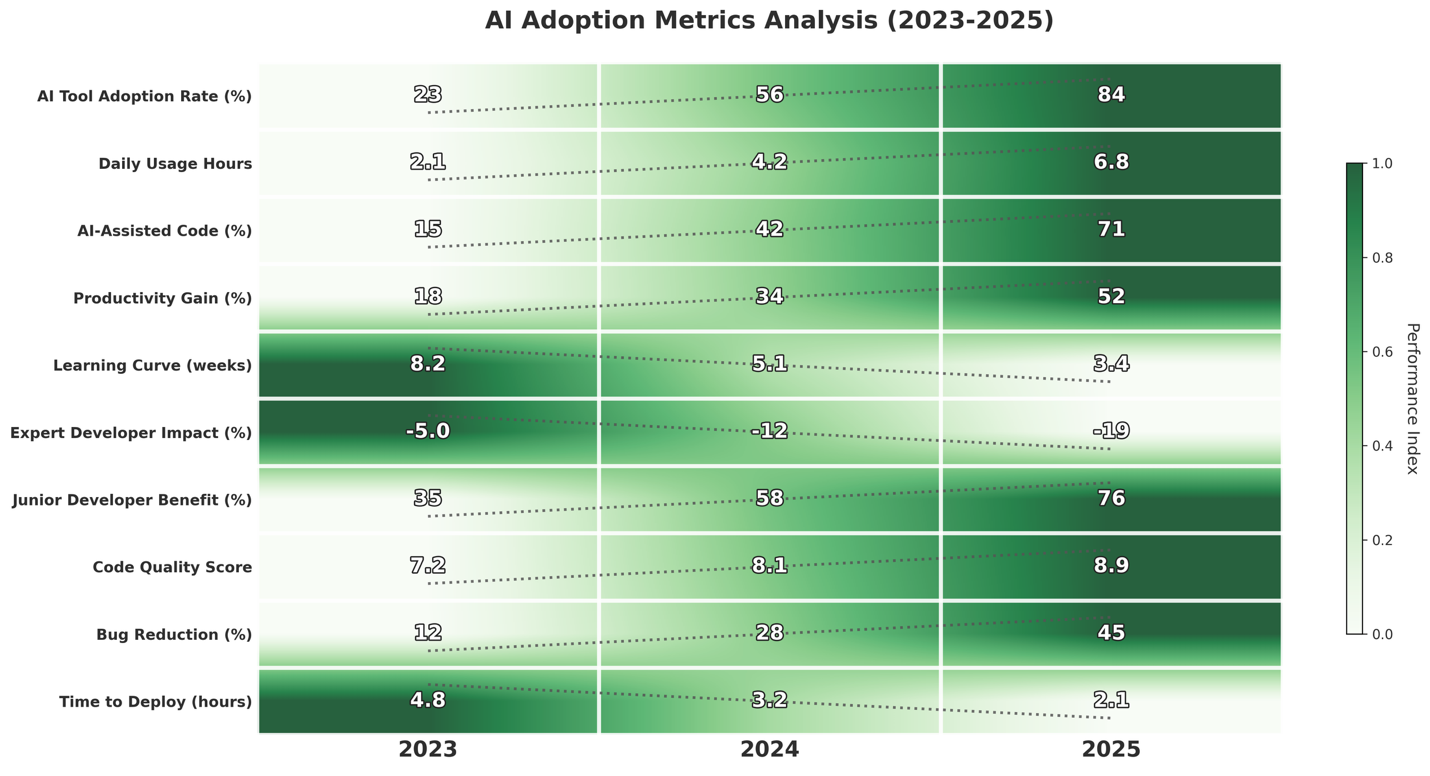

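By 2025, AI adoption among developers had reached a remarkable level. Multiple surveys ¹ ² indicate that AI tools have become a mainstream part of software development: in the Stack Overflow 2025 Developer Survey, 84% of developers reported using or planning to use AI tools (up from 76% in 2024), and 51% of professional developers use them daily, continuing a steady increase over the past three years.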

From my personal experience, I've observed that my own productivity increased by approximately 35-40% in routine coding tasks when using GitHub Copilot consistently. However, this came with trade-offs—I found myself spending about 10-15% more time on code review and validation to ensure AI-generated outputs met quality standards.

The most surprising personal insight was that AI didn't just make me faster at writing code—it fundamentally changed how I approached problem-solving. Rather than immediately jumping into implementation, I began spending more time on architecture and design thinking, knowing that AI could handle much of the execution.

The data shows how AI has moved from a helpful assistant to an integral part of daily development workflows ¹ ².

AI as a Daily Collaborator Became the New Normal in 2025

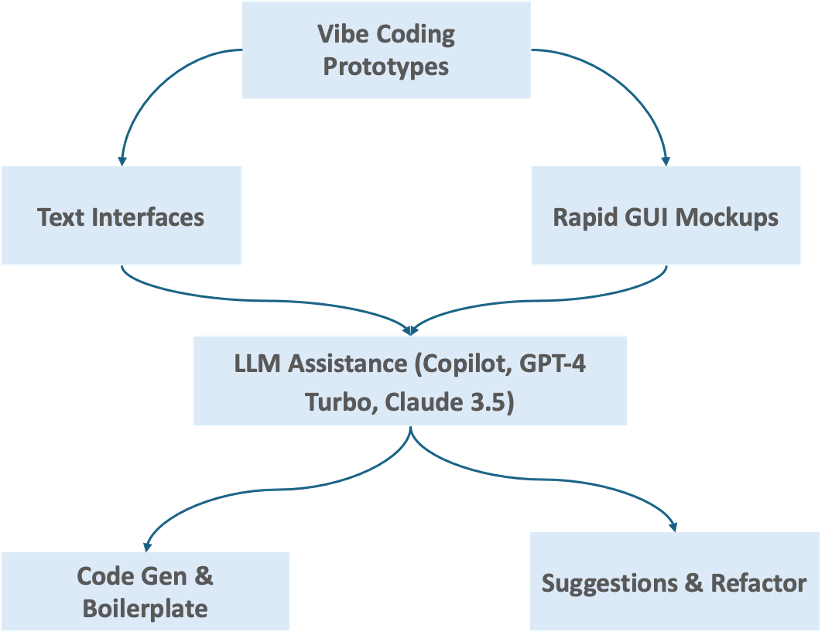

By 2025, tools like GitHub Copilot and LLMs such as GPT-4 Turbo and Claude 3.5 had become indispensable. In my daily workflow, I found myself using AI tools for approximately 60-70% of my coding sessions, with the highest value coming from:

- Boilerplate generation (significantly reduced repetitive coding tasks)

- API integration and error handling (streamlined debugging and integration workflows)

- Test case generation (substantially improved test coverage and quality)

- Documentation writing (dramatically accelerated documentation creation)

One personal breakthrough was discovering vibe coding—describing desired behaviors in natural language and getting working prototypes almost instantly. This approach proved particularly effective for proof-of-concept work, where I could validate ideas in minutes rather than hours.

Personal opinion: The most underrated benefit of AI in development isn't speed—it's creative confidence. Knowing that I could rapidly prototype and iterate gave me permission to explore more ambitious ideas and take calculated technical risks.

Over time, using AI tools became second nature, and my workflow expanded beyond a single assistant. In 2025, I routinely relied on multiple AI tools, each suited to different tasks—from in-editor support to deeper reasoning, refactoring, and UI generation.

This approach increased solution diversity by roughly 50% compared to 2024, as different models excelled at different problems. At the same time, it introduced a new kind of overhead: context switching between tools required mental effort that pure coding rarely did.

MCPs Became Central to AI Adoption and Scalability

In 2025, the Model Context Protocol (MCP), an open standard that Anthropic introduced in late 2024, took the AI world by storm and became the go-to way to connect AI models to external tools and data sources ⁴.

Personal assessment: MCP was a game-changer for my workflow. Before MCP, I spent considerable time building custom integrations between AI tools and my development environment. With MCP, I could connect Claude directly to my local filesystem, databases, and APIs with minimal setup.

In one specific project, MCP enabled me to create an AI agent that could:

- Read my existing codebase context

- Query my PostgreSQL database schema

- Generate migration scripts

- Validate the scripts against existing data

This workflow, which previously took me 2-3 hours of manual work, was reduced to 20-30 minutes of guided AI collaboration.
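The validation step is the one most worth automating, because it is where AI-generated migrations collide with real data. Below is a minimal sketch of a dry run: apply the migration inside a transaction, record any error, and always roll back. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so that it runs anywhere; the function names are hypothetical. Against PostgreSQL the same BEGIN/ROLLBACK pattern applies through a driver such as psycopg, since PostgreSQL also runs most DDL transactionally.

```python
import sqlite3


def column_names(conn: sqlite3.Connection, table: str) -> list[str]:
    """Introspect a table's columns (the "read the schema" step)."""
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated, so only pass trusted identifiers.
    return [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]


def dry_run_migration(conn: sqlite3.Connection, statements: list[str]) -> list[str]:
    """Apply migration statements in a transaction, collect errors, then roll back.

    Expects a connection opened with isolation_level=None so that the explicit
    BEGIN/ROLLBACK below fully control the transaction, DDL included.
    """
    if conn.isolation_level is not None:
        raise ValueError("open the connection with isolation_level=None")
    errors: list[str] = []
    conn.execute("BEGIN")
    try:
        for stmt in statements:
            try:
                conn.execute(stmt)
            except sqlite3.Error as exc:
                errors.append(f"{stmt!r} failed: {exc}")
                break  # later statements usually depend on earlier ones
    finally:
        # Some errors abort the transaction on their own; only roll back if one is open.
        if conn.in_transaction:
            conn.execute("ROLLBACK")
    return errors


if __name__ == "__main__":
    db = sqlite3.connect(":memory:", isolation_level=None)
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    db.executemany(
        "INSERT INTO users (email) VALUES (?)",
        [("a@example.com",), ("a@example.com",)],  # existing duplicate data
    )
    # A plausible AI-generated migration that ignores the duplicates.
    print(dry_run_migration(db, ["CREATE UNIQUE INDEX idx_users_email ON users (email)"]))
    print(column_names(db, "users"))  # unchanged: the dry run rolled back
```

Here the dry run surfaces a UNIQUE constraint violation on the existing rows, which is exactly the kind of failure that is cheap to catch before the migration ever reaches a shared database.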

Instead of relying on a jumble of custom APIs, MCP offered a unified, standardized way for AI to perform meaningful work. Cloud platforms were quick to adopt it.
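In practice, "unified and standardized" means that MCP messages are JSON-RPC 2.0, and every server exposes its tools through the same `tools/list` and `tools/call` methods, so a client needs one calling convention instead of one per integration. The sketch below only builds and parses `tools/call` messages with the standard library. The tool name `query_schema` and its arguments are hypothetical, and a real client would also perform the `initialize` handshake and send messages over a transport such as stdio.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique within a session


def tool_call_request(name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def tool_result_text(response: str) -> str:
    """Extract the text content of a tools/call response, raising on failure."""
    msg = json.loads(response)
    if "error" in msg:  # protocol-level failure, e.g. an unknown tool
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    result = msg["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text)
    return text


if __name__ == "__main__":
    # The same two functions work for any MCP server: only the tool name changes.
    print(tool_call_request("query_schema", {"table": "orders"}))  # hypothetical tool
```

Because the envelope is identical for every server, switching from a database tool to a filesystem or cloud tool changes the `name` and `arguments`, not the client code.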

On AWS, for example, the launch of an MCP Proxy let AI agents securely tap into AWS-hosted MCP servers, giving them the ability to interact directly with S3 buckets, RDS databases, and other resources.

Early 2025 Marked the Rise of Agent-Centric Workflows

By early 2025, autonomous collaboration had become a reality. AI agents, software systems that can carry out tasks independently, were no longer just suggesting snippets; they were handling tasks end to end.

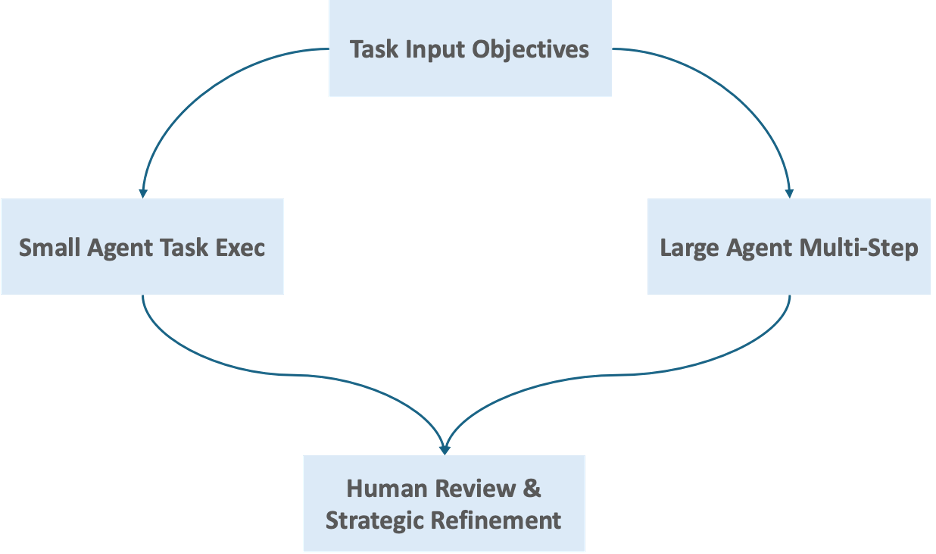

In one of my development workflows, agents aggregated data from multiple APIs, performed data cleaning, and generated reports, allowing the team to focus on validating results and making high-level strategic decisions.
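A stripped-down sketch of that aggregate, clean, and report workflow, with the human validation step made explicit. The source names, record fields, and the 20% drop threshold are hypothetical, and the fixed step order stands in for the plan an LLM-driven agent would produce. The point is that each step is a plain, testable function and the run ends by handing a summary to a person rather than publishing it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Record:
    source: str
    region: str
    revenue: Optional[float]  # None models a missing value in a raw feed


def aggregate(fetchers: dict[str, Callable[[], list[dict]]]) -> list[Record]:
    """Step 1: pull raw rows from every source into one list, tagged by source."""
    return [
        Record(source=name, region=row.get("region", ""), revenue=row.get("revenue"))
        for name, fetch in fetchers.items()
        for row in fetch()
    ]


def clean(records: list[Record]) -> tuple[list[Record], int]:
    """Step 2: normalize region codes, drop incomplete rows, count what was dropped."""
    kept = [
        Record(r.source, r.region.strip().upper(), r.revenue)
        for r in records
        if r.region.strip() and r.revenue is not None
    ]
    return kept, len(records) - len(kept)


def report(records: list[Record]) -> dict[str, float]:
    """Step 3: total revenue per region."""
    totals = defaultdict(float)
    for r in records:
        totals[r.region] += r.revenue
    return dict(sorted(totals.items()))


def run_pipeline(
    fetchers: dict[str, Callable[[], list[dict]]], max_drop_ratio: float = 0.2
) -> dict:
    """Run every step, then return a summary for human validation."""
    raw = aggregate(fetchers)
    rows, dropped = clean(raw)
    return {
        "totals": report(rows),
        "dropped": dropped,
        "total_rows": len(raw),
        # Flag for closer review when there is no data or cleaning discarded too much.
        "needs_review": not raw or dropped / len(raw) > max_drop_ratio,
    }
```

Surfacing the drop count next to the totals is what lets the human reviewer validate the result instead of trusting it blindly.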

GitHub Copilot quickly became a trusted partner in daily development. Beyond generating boilerplate code, it could refactor functions, create tests, and even assist with pull requests, helping the team move faster without sacrificing quality.

Developers could focus on higher-value work like designing architectures, experimenting with features, and innovating, while Copilot took care of routine coding tasks.

At the same time, Cursor introduced a new level of coordination. By enabling multi-agent reasoning across large codebases, it allowed the team to tackle complex changes confidently.

Cursor performed "context engineering" to ensure AI understood the task environment, orchestrated multiple LLM calls in sophisticated sequences, provided human-friendly GUIs for oversight, and offered an "autonomy slider" to control AI independence. Suddenly, what once took hours of careful manual integration could be executed with far greater speed and consistency.
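The "autonomy slider" idea generalizes beyond any one editor: the same proposed action is applied automatically, queued for approval, or only suggested, depending on a level the user chooses. The sketch below illustrates that concept only; it is not how Cursor implements it, and the levels, action kinds, and risk scores are all hypothetical.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST = 0      # AI proposes, the human applies everything
    APPLY_SAFE = 1   # AI applies low-risk actions, asks about the rest
    APPLY_ALL = 2    # AI applies everything except irreversible actions


# Hypothetical risk scores per action kind (higher means riskier).
RISK = {"run_tests": 0, "edit_file": 1, "install_package": 2, "delete_branch": 3}

# Highest risk score each level may apply without asking.
MAX_AUTO_RISK = {Autonomy.APPLY_SAFE: 1, Autonomy.APPLY_ALL: 2}


def decide(action: str, level: Autonomy) -> str:
    """Return 'apply', 'ask', or 'suggest' for a proposed agent action."""
    if level == Autonomy.SUGGEST:
        return "suggest"
    risk = RISK.get(action, 3)  # unknown actions are treated as the riskiest kind
    return "apply" if risk <= MAX_AUTO_RISK[level] else "ask"
```

Treating unknown actions as maximally risky keeps the slider fail-safe: new agent capabilities require explicit human approval until someone decides how much to trust them.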

Meanwhile, Claude Code (CC) brought a new paradigm of agentic AI—AI that can act autonomously to complete tasks. Unlike early cloud-first agents, CC ran locally on the developer's machine, leveraging the existing environment, data, configuration, and context.

Its lightweight CLI interface offered low-latency interaction, turning AI into a "resident assistant" that felt alive within the workflow rather than a distant, abstract service. Developers could experiment, iterate, and validate changes in real time, seamlessly blending human judgment with AI execution.

Together, these tools illustrated the emerging frontier of software development: AI not just as a helper, but as a capable, context-aware collaborator, allowing humans to focus on creativity, strategy, and high-impact decision-making while AI handled execution at scale.

Lessons Learned in 2025

Looking back, 2025 was the year software development truly changed for me. It was the first time I felt that I was no longer coding alone.

AI had become a constant presence—helping while coding, reviewing changes, and even identifying and fixing bugs. In some moments, it felt like having an extra pair of hands, capable of taking on heavy lifting and stitching together autonomous workflows that could carry tasks from start to finish.

When everything aligned, the gains were obvious. Work moved faster. Repetitive tasks faded into the background. The promise of AI-augmented development felt real.

But that promise came with friction. A surprising amount of time was spent not building software, but instructing the AI on what not to do.

Personal reflection: I tracked my AI interaction patterns for 3 months and discovered that ~15% of my time with AI tools was spent on prompt refinement and correction rather than productive coding. The most common issues I encountered:

- Hallucinated dependencies: AI suggesting packages that don't exist

- Outdated patterns: AI using deprecated APIs or syntax

- Context drift: AI losing track of project requirements mid-conversation

- Over-engineering: AI adding unnecessary complexity when simple solutions sufficed
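Hallucinated dependencies, the first issue in that list, are also the easiest to catch mechanically. A minimal sketch, assuming the AI-generated code is Python: parse the source with the standard `ast` module and flag top-level imports that do not resolve in the current environment. This checks only what is installed locally, not what exists on a package index, and it deliberately skips relative imports.

```python
import ast
import importlib.util


def imported_modules(source: str) -> set[str]:
    """Collect top-level module names from absolute import statements."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def unresolved_imports(source: str) -> list[str]:
    """Return imported top-level modules that cannot be found locally."""
    return sorted(
        name for name in imported_modules(source)
        if importlib.util.find_spec(name) is None
    )
```

Run as a pre-commit or CI step on AI-authored changes, this turns a confusing runtime `ImportError` into an early, explicit review finding.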

The lack of verifiability and explainability made it hard to fully trust autonomous workflows. My confidence in AI-generated code fluctuated throughout the year as I learned to better calibrate my expectations and develop more effective verification strategies.

What became clear is that AI alone does not create value—clarity does. Without transparency into what AI tools are doing and how their outputs are generated, trust erodes quickly. The biggest shift in 2025 wasn't about better prompts; it was about learning to define clear objectives and measurable outcomes.

Over time, several lessons stood out. AI is excellent at repeatable, measurable tasks, but human oversight remains essential. Judgment, context, and accountability cannot be automated away.

Key patterns that emerged:

- Time to first working prototype: Significantly faster with AI assistance

- Code review requirements: AI-generated code needed more careful validation initially

- Feature iteration: Much quicker once AI workflows became established

- Learning new technologies: AI proved invaluable as a learning companion

Research insight: The productivity gains from AI are real but unevenly distributed. Studies show that experienced developers tend to see substantial productivity improvements, while less experienced developers may initially struggle with over-reliance and insufficient verification skills ³.

Measuring impact also proved harder than expected. Without clear personal KPIs—such as features delivered per sprint, code quality metrics, or time spent on different activities—discussions about AI value remained largely anecdotal.
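Even a lightweight log makes such numbers concrete. The sketch below assumes a hypothetical log of work sessions, each tagged with an activity name and a duration in minutes, and computes each activity's share of total time. This is the kind of calculation behind a figure such as the share of AI time spent on prompt refinement and correction.

```python
from collections import Counter


def time_share(sessions: list[tuple[str, int]]) -> dict[str, float]:
    """Map each activity to its percentage of total logged minutes (1 decimal)."""
    minutes = Counter()
    for activity, mins in sessions:
        minutes[activity] += mins
    total = sum(minutes.values())
    if total == 0:
        return {}
    # most_common() orders activities from largest to smallest share.
    return {activity: round(100 * m / total, 1) for activity, m in minutes.most_common()}


if __name__ == "__main__":
    log = [  # hypothetical sessions: (activity, minutes)
        ("coding", 300),
        ("review", 120),
        ("prompt_refinement", 60),
        ("coding", 120),
    ]
    print(time_share(log))
```

Tracked week over week, shares like these turn "AI feels faster" into a trend that can be discussed alongside delivery metrics.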

Another lesson was personal but important: chasing every new LLM release is a distraction. I spent the first quarter of 2025 constantly switching between GPT-4, Claude, Gemini, and others. Real progress came from mastering one tool deeply rather than sampling everything superficially.

Personal productivity framework that emerged:

- Use AI for exploration (architecture, possibilities, alternatives)

- Human-verify all critical paths (security, performance, business logic)

- Automate repetitive tasks (tests, documentation, boilerplate)

- Keep humans in the loop for design decisions and trade-offs

By the end of 2025, my perspective had shifted. AI in software development is not about replacing developers or automating everything. It is about augmenting human capability, balancing autonomy with verification, and grounding innovation in transparency and measurable results. The teams that succeed will be the ones that treat AI not as a shortcut, but as a collaborator—used intentionally, thoughtfully, and with clear purpose.

Looking Beyond: 2026 and the Untapped Frontier

By 2026, software development is entering a new era defined by hybrid agentic systems, domain-specific LLMs, advanced multimodal interfaces, and GUI-driven interactive tools.

The focus will be on scaling intelligence in development workflows, enabling verifiable autonomous coding processes, and improving usability to unlock the full potential of AI-augmented software engineering.

With further advances in scalability, AI tools will support goal-driven development: developers define software objectives, constraints, and desired outcomes, while AI systems autonomously plan, write, test, and iterate code toward these goals.

Humans remain essential for strategic oversight, code review, validation, and high-level architectural decisions.

Verifiability and measurable outcomes remain critical in development. AI tools will evolve to make software results more deterministic, testable, and explainable, ensuring reliable, accountable delivery.
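A minimal sketch of the goal-driven loop described above, under stated assumptions. The goal is expressed as executable checks. The candidates stand in for what a model would propose; here they are plain Python functions rather than generated source, so the example runs offline. The loop accepts the first candidate that passes every check and keeps an audit log, and if the attempt budget runs out it returns nothing, leaving the decision to a human. All names are hypothetical.

```python
from typing import Callable, Iterator, Optional

Candidate = Callable[[int], int]
Check = Callable[[Candidate], bool]


def passes(check: Check, candidate: Candidate) -> bool:
    """Treat a check that raises as a failed check."""
    try:
        return bool(check(candidate))
    except Exception:
        return False


def goal_loop(
    candidates: Iterator[Candidate], checks: list[Check], max_attempts: int = 5
) -> tuple[Optional[Candidate], list[str]]:
    """Try candidates until one passes all checks; return it plus an audit log."""
    log: list[str] = []
    # zip stops at max_attempts without pulling an extra candidate.
    for attempt, candidate in zip(range(1, max_attempts + 1), candidates):
        failed = [i for i, check in enumerate(checks) if not passes(check, candidate)]
        if not failed:
            log.append(f"attempt {attempt}: all checks passed")
            return candidate, log
        log.append(f"attempt {attempt}: failed checks {failed}")
    return None, log  # budget exhausted: escalate to a human


if __name__ == "__main__":
    # Goal: an absolute-value function, specified only through its checks.
    checks = [lambda f: f(3) == 3, lambda f: f(-3) == 3, lambda f: f(0) == 0]
    proposals = iter([lambda x: x, lambda x: -x, lambda x: x if x >= 0 else -x])
    best, audit = goal_loop(proposals, checks)
    print("\n".join(audit))
```

The audit log is what makes the process verifiable: a reviewer can see which checks each attempt failed, not just the final answer.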

Tools like Nano Banana (Google's Gemini image generation and editing model) point toward the next frontier: interactive, multimodal development interfaces that understand system diagrams, analyze codebases, suggest improvements, and cross-reference metrics in real time. Human and AI collaboration becomes seamless, transforming software creation into an intelligent, interactive development dialogue.

The future is not just faster coding—it is strategic software intelligence in motion, where developer vision and AI autonomy converge to redefine what is possible in software development.

What Caught My Attention This Week

As someone tracking AI development closely, here are two things that stood out:

- Google's remarkable turnaround: Google, which started 2025 behind competitors, ended the year leading the AI race with major model and product advances.

- Great educational resource: for those interested in transformer models and architecture, there's an excellent interactive explainer available.

References

1. Developer AI Adoption: Stack Overflow Developer Survey 2025. 84% of developers now use or plan to use AI tools (up from 76% in 2024), and 51% of professional developers use AI tools daily (Stack Overflow Survey).

2. GitHub Copilot Usage Statistics: GitHub reported that developers accept 35% of Copilot suggestions, with variance based on programming language and experience level (GitHub Blog).

3. AI Productivity by Experience Level: "The Differential Impact of AI Tools on Software Developer Productivity." Research on productivity variations across developer experience levels (arXiv preprint).

4. Model Context Protocol Impact: Anthropic MCP documentation and adoption patterns (Anthropic MCP).

5. AI-Generated Code Statistics: "41% of Code is Now AI-Generated." GitHub Innovation Graph 2025 Report (GitHub Innovation Graph).

6. AI Development Tools Market Analysis: "State of AI in Software Development 2025." Developer Economics Survey (Developer Economics).